Exploring what “responsible technology” means

At Doteveryone, we’d like to see technology become more responsible — in terms of how it’s developed, how it works for users, and how it affects society. So as part of our work to help Britain lead the world in ethical tech, we’re exploring what “responsible technology” means and how we can encourage more of it.

Responsible technology is important for delivering a fairer internet experience, and a positive future where tech is useful, trusted and trustworthy. We need technology that is developed and maintained in ways that support this vision, and driving that requires making ethical and responsible activity not just more worthy, but easier and more valuable too.

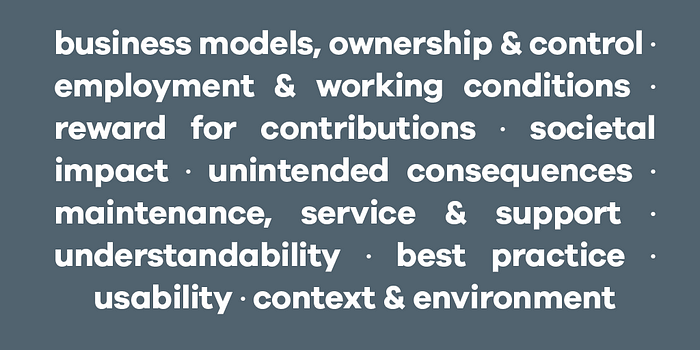

A big part of our work in 2017 (and beyond) involves figuring out how to drive more ethical, responsible and appropriate development and operation of digital technology. To kick that off, we've drafted ten areas that responsible tech should consider. About half of them are specific to digital, while the other half are broader aspects of responsibility that could equally apply to other organisations. In other words, our list isn't just about technology itself; it's about the people who develop, manage and invest in tech, about users, and about the wider context too.

We think it's useful for the foundations of responsible technology to be somewhat future-proof, and not too specific about individual technologies. (That means there's no mention of algorithmic bias, advertising-backed platform monopolies, autonomous vehicles, or anything else like that.) Instead, we hope these specifics will be covered by the general ideas below, and as this work develops we expect to refer to good work being done in some of these specific fields.

Here's a look at where we've got to so far: aspects of responsibility for people and organisations that develop, contribute to and operate products, services, infrastructure and platforms using digital technologies. These are fairly broad areas, and we'll develop them further in the coming months.

1. Business models, ownership and control

The business model and organisational structure should be appropriate for the tech in question, and the value given and received by different stakeholders should be reasonable. Organisations should be established in appropriate locations, and should of course follow local and international law, pay their taxes, etc.

2. Employment and working conditions

Everyone involved in producing tech should have fair pay and conditions, and work in environments free from exploitation. Workplaces should be inclusive in terms of gender, age, ethnicity, etc. CEO-to-worker pay ratios should be reasonable. All of the above should also apply to the supply chain, including subcontractors, hosting providers and so on, not just to those directly creating or operating the tech in question.

3. Reward for contributions

Services that use people's labour or information should reward those people fairly for their contributions. (This could include anything from data analysis to microtasking to Google's "I'm not a robot" reCAPTCHA.) Rewards could take many forms, such as pay, shares, in-kind benefits or discounts, but should be fair relative to the value generated by the information or effort contributed. Reused materials should be attributed appropriately.

4. Societal impact

Technology should add something to the world or, at the very least, not take anything away. Beyond a product or service's actual function, organisations should consider contributing to public or commons infrastructure, and should consider their impact on public services.

5. Unintended consequences

Not all risks can be avoided, but they should be anticipated, and actions to avoid them — or to mitigate their consequences — should be planned. During both design and maintenance, systems effects, side effects, and potential harms for different people, stakeholder groups or the wider environment should be considered. This should not be limited to what happens if things go wrong; plans should also include what happens if a product or service becomes overwhelmingly successful.

6. Maintenance, service and support

Responsible technology needs to work tomorrow as well as today. All products and systems should offer users help and support, access to necessary updates for a reasonable period, and graceful degradation when necessary. This means considering, at the design stage, what happens to customers if the product or service doesn't take off, if the business fails, or if the business is acquired by someone else.

7. Understandability

People should be able to easily find out and understand how a product or service works. This includes clear, understandable terms and conditions, but goes beyond that; costs, service levels, and specifics such as data sourcing, storage, management and sharing, etc., should all be accessible and comprehensible. Users should understand how to raise concerns or complain about the service, and know what to expect if something goes wrong or changes.

8. Best practice

Responsible technology is useful technology that interoperates with other things as far as possible and is designed for real people and situations. Whatever technology is being developed, appropriate standards and best practices should be used, and any technology-specific ethical guidelines should be followed. To ensure the technology is useful, good design practices such as human-centric design and systems design should be used throughout development, testing and operations. Depending on the product or service, sustainability considerations may also be relevant: that might mean hardware designed for reuse, repair or recycling, or attention to energy use. Reusing appropriately licensed code is also good practice!

9. Usability

Especially if a broad range of users is expected, or if people will be compelled to use a product or service, accessibility and support should be appropriate. This includes conventional accessibility considerations, for example designing for screen readers, but goes much deeper. Does a product or service work for someone with mental health issues, or memory problems? Someone who relies on a carer? Someone without a smartphone, with only an old phone, or with limited or filtered internet access? Enabling support staff to work around exceptional cases is key.

10. Context and environment

Nothing operates in isolation. The context technology operates in should be appropriately considered and addressed, including who may use or encounter it and how it may be interpreted. A service that offers support and guidance should be clear that it's offering advice, not dictating choices; ambient home technology should consider what happens if it's used by children, guests, etc.

By working to create a set of clear and actionable guidelines, we’re hoping to start conversations about making more responsible digital products and services, and to find opportunities for different ways we can encourage more responsible technology.

If you have thoughts on this framework, or if you’d like to make comments or additions, we’ve opened up a doc for feedback. We’re also holding an event in partnership with Co-op Digital on 20th June to discuss ethical digital products and services, so please join us — or contact Laura if you’re interested in collaborating with us more deeply to develop these ideas, or to find ways to encourage more responsible and ethical technology.