First, Do No Harm: Taming the Gods of the Anthropocene

In the UK in 2017, there’s a sense of depth and complexity in every moment: not only are we finding our way through an unfamiliar political and economic landscape, but technology is also constantly shifting our personal and social contexts.

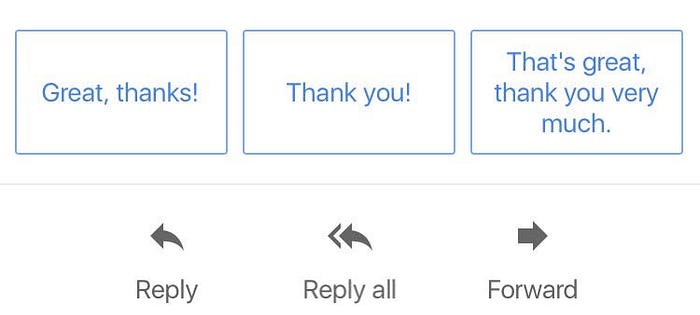

On a personal level, we’re used to hopping on and off free wifi while vaguely worrying about getting hacked; Facebook ads are sometimes so uncanny we think our phones might be listening to us; every parent is worried about the content of their kids’ Snapchat. Globally, our culture is being shaped by a small number of companies in Silicon Valley, and we’re mostly too busy or too preoccupied to draw the many complicated lines we’d need to work out whether those uncanny Gmail auto-replies have anything to do with games of Go.

And because we can’t readily understand what Google or Facebook wants to do, it’s easy not to try: it’s easy not to stress about a corporate business plan if you can’t afford a place to live or are worrying about a possible missile launch.

One reason things feel so gloopy — so hard to understand — is we don’t really know how to talk about them. The movements and ideologies that we’ll eventually come to use as shortcuts aren’t yet formed enough to point to. And when we talk about technology we don’t quite know what we’re trying to describe. Rather than thoughts or feelings or political opinions or moral perceptions, we’re suddenly talking about hardware or software or a robot we’ve seen in a movie, or some weird mixture of all three. We can’t say right now what the two sides will be in the Great Technology Wars of 2060; who’ll start the revolution against who, and why, or whether something is “good” or “bad”. We mostly just know some stuff is happening and that it’s complicated.

Can my employer ask to see my Facebook posts? Is my healthcare affected by automated decision making? Can the government read my text messages? Why does my taxi company know where I go after I get out of the car? Will my job get automated? Should I learn to code?

There are thousands of questions like this, and not many good answers.

I’ve spent a lot of the last year researching and trying to understand how the big platform businesses are changing our lives. And it seems possible that Jeff Bezos, Mark Zuckerberg, and Eric Schmidt aren’t just being secretive: they may not know where their stories end. Perhaps none of them have the kind of logical, long-term goals we expect; they’re all dealing in potential, making bets with themselves that everything can be mastered, understood and owned, and setting aside the realities of human and environmental interference and unintended consequences. This sort of focus wouldn’t have washed with Mr Spock; it’s mystical, not logical, a belief state rather than a business plan. What if Bezos, Zuck, Schmidt, Elon Musk, Tim Cook and Travis Kalanick all just believe in themselves, in technology, in the capability of their companies and their products? What if their future-facing networked capitalism is driven by the most predictable and traditional kind of change of all: religion?

Because it all makes more sense if they’re acting in faith: faith that artificial general intelligence can be achieved, faith in space travel, faith in reinventing citizenship. None of these are logical, probable, or necessary, but they’re possible in the same way miracles are. If Elon Musk, Jeff Bezos, Mark Zuckerberg, Eric Schmidt and the others are the new Gods of the Anthropocene, then the credo of their followers is that technology leads and humanity follows.

This is a world away from Steve Jobs’ barefoot Californian entrepreneurialism or the laissez-faire optimism of “open” technology. It’s Exponentialism: a belief that technology and ingenuity will triumph over the limits of possibility, and that progress will take an ever-sharper trajectory.

Elon Musk’s vision unfolds over Twitter like the storyboard of a Bruce Willis film; Jeff Bezos has a home exoskeleton; if Mark Zuckerberg isn’t running for president, then perhaps he’s walking among the people like Jesus, and some day soon we’ll be asked to swipe our phones on the chip embedded in his hand. They’re a motley gathering of man-gods, and the Ts&Cs we’ve signed along the way aren’t just enabling a technical and economic revolution, but a spiritual one too.

At Doteveryone, we’re working on ways to make technology more “responsible”: more aware of its consequences and more engaged with society. To do that, we need to reach a shared idea of what is reasonable, something that not only works in the context of our national laws and the global Internet, but that also chimes with a shared moral compass. Before we can get to the technology equivalent of “First, do no harm”, we need to agree on what harm is, what won’t be done, and what the penalty might be. If Heaven is the Singularity, what does Hell look like?

Exponentialism is limitless. Rules are there to be broken, norms and expectations defied. But just because we can, doesn’t mean we should, and just because we liked a picture, posted a video or clicked on a targeted ad doesn’t mean we’ve entered into a binding contract with an invisible moral code.

But where to start? What governance should be in place for the neurological consequences of letting algorithms complete our sentences, the social consequences of devaluing different kinds of people, the apocalyptic consequences of letting wars begin on Twitter, the democratic consequences of censorship, and the slow erosion of free will that comes with automating everything?

Trump’s bonfire of American politics means legislation won’t begin at home, and EU legislation (probably the best chance we have) will be finite and biased against Western capitalist norms — notwithstanding, of course, the fact that laws are there to be “disrupted”. In the UK right now, our government is so removed from the real moral and ethical challenges of technology that it’s trying to ban end-to-end encryption.

So if the law can’t help us, how can we usefully respond to Exponentialism? Can we agree that not everything needs to be finished, that the UN Sustainable Development Goals are a good enough set of challenges for humanity, and that, to paraphrase the Agile Manifesto, it should be “People before Products”? For humanity to come out on top, we don’t all need to learn to code, but we do need to understand what’s happening to us and set some boundaries and consequences. The 2 billion people on Facebook deserve the same protection as the citizens of any other nation, and should get the same opportunities to rebel. It’s not so far-fetched to propose that governance of Google, Apple, Facebook and Amazon should pass to the UN Human Rights Council, but it’s hard to know what skills and understanding that body would need to make a good job of it.

Of course, regulating the big platform businesses is just one part of the puzzle, but it’s an important one. Improving global political understanding of the consequences and potential of technology, now and in the near future, will give us the chance to enshrine the freedoms and protections we have come to expect in a free and democratic society. Prevention is better than undoing what is already done.

In the meantime, as an industry — as designers, developers, product managers, board members, VCs, you name it — we could start by thinking ahead a little and agreeing to do no harm. Just because it’s really cool, doesn’t mean it always needs to happen. After all, no one really wants their great-grandchildren’s lives to be like one of the less good Star Wars films.