The public shaping the impacts of tech

AI is no longer a niche subject. Its pace of development is increasing rapidly and affecting all parts of our lives and society. Even decisions made by governments are becoming increasingly automated, like using algorithms to screen immigrants and allocate social services.

This makes it vital that, as citizens, we are able to interrogate these systems and hold them to account, because they affect our lives and the society we live in.

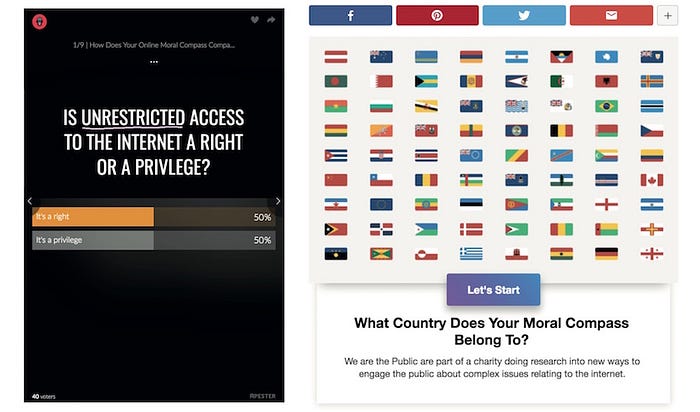

Whilst we may ask the public their opinions on technology, opinion changes according to how the questions are asked. For example, YouGov recently surveyed Britons about their support for the introduction of various technologies that are argued to be interventions on our civil liberties: ID cards, CCTV cameras monitoring all public spaces, and a database of all British citizens’ fingerprints. They found that public favour for such interventions shifts according to the context in which the technology is considered. For instance, 55 percent of Brits support a legal requirement on phone manufacturers to unlock a password-protected phone; this rises to 64 percent when the purpose is tackling crime and 68 percent when it is combatting terrorism.

Asking people what they think about technology and its impact on our lives is important, but asking in the right way is important too.

So for the past few months Doteveryone (as introduced in this blog) has been exploring public engagement: its methods, processes, outcomes and opportunities for innovation. We wanted to explore:

- What does 21st Century public engagement look like for complex internet issues?

- How can technology make public engagement more modern?

- What are the systemic issues 21st Century engagement must consider?

These are definitely not the kind of questions that can (or should!) be answered in a single round of research. We will be sharing our learning and key insights from the public engagement prototypes we developed in a follow-up post, covering what methods of public engagement are currently in use and how effective they are, as well as how people’s level of engagement and understanding varies according to different forms of engagement. Here we focus on the overarching findings from the work. This includes:

- What could an alternative form of public engagement, that aims to mitigate some of these issues, be?

- What are some of the unanswered questions?

- What needs to happen going forward?

What we learnt — current issues with public engagement

After some investigation, which included a landscape analysis identifying as many categories of public engagement (e.g. co-design vs citizen juries vs exhibitions) and as many types of digital public engagement (e.g. games, trackers, polls) as we could, and speaking with a range of experts*, we’ve concluded that the following are some of the most significant issues with existing public engagement methods:

Public engagement can be shockingly expensive

For example, a two-day set of citizen juries can run anywhere from £20,000 to £50,000. That’s because they involve a lot of work to organise, plus allocated costs like participant incentives and travel reimbursements.

It doesn’t always work at scale

When a method relies on being together physically, like in co-design sessions or focus groups or exhibitions, the number of potential participants immediately dips. People may not always be in the right geographical location or have a flexible enough schedule to participate, limiting the type of data it’s possible to collect.

It doesn’t always gauge opinion shift

For example, a participatory budgeting tool can help citizens learn more about how funding levels affect service delivery. But tools like these can only track a user’s final choice, not how they came to make that choice. Many of the engagement platforms are good at capturing very surface level opinion, without ever getting deeper into the trade-offs and challenges inherent in making decisions in complex systems.

We don’t need more new creative methods

There is a lot of discussion about how we do public engagement and not very much discussion about the why. The methods are rarely the problem. So rather than just doing public engagement for its own sake, we should focus on asking what our motivations are for doing it.

What comes after the engagement?

You’ve invited the public in and empowered them in the discussion. You’ve generated the message that others can be involved. What happens next? How do public institutions then respond to what people think?

“Established organisations can be creaky and slow to change, hoarding power at the top when people want them to be more contemporary, responsive, engaged, and where appropriate more fluid.”

Tech issues are harder

There are particular issues to do with science and technology that make public engagement particularly hard, because the subject is seen as hard. It’s easy to say “you don’t understand it therefore you don’t need to be part of the discussion.” The good news stories have tended to come from health: people care about health because their lives are at risk. People don’t currently connect in the same way to issues of the internet.

“You don’t need to be able to code, to understand power and accountability.”

There are some good precedents emerging

For example, DeepMind’s ethics panel shows that it is possible for organisations to be proactive in being reflective about their ethical practice and start behaving more responsibly. The Citizens’ Juries that they’ve commissioned the RSA to run are a good starting point in opening up a window and enabling the public to contribute to a bigger discussion.

What’s the starting point?

We need more information out there, around the issues of tech and AI, to raise the public consciousness for public engagement to be more effective. The recent publicity around Facebook has helped. But we also need the public to have a better digital understanding before we can expect them to meaningfully engage. Our most recent People, Power and Technology report shows there are currently low levels of public understanding around digital technologies.

How can we make public engagement more effective?

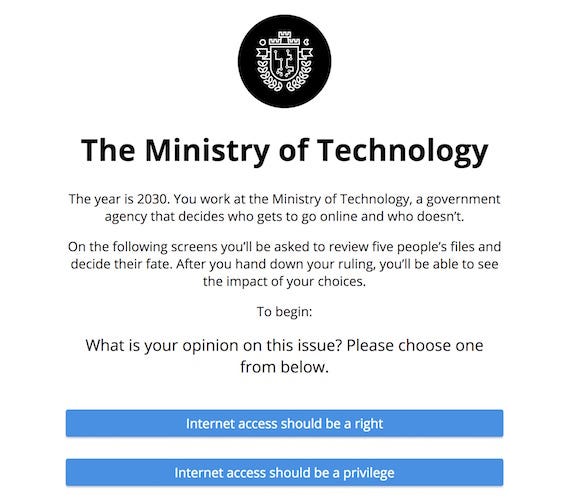

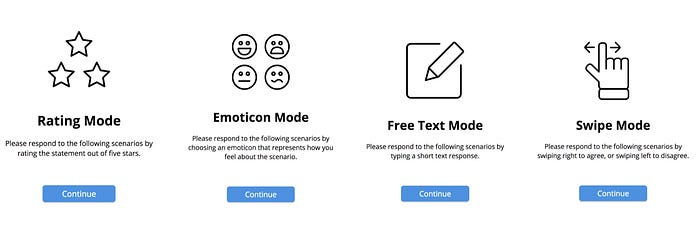

In our initial experiments (detailed in our next post) we were able to test out different methods, especially to understand the types of information, content design and interaction that can influence how people make decisions. Through these we gathered data from different sources — online quizzes, forum posts, scenario games, opinion polls.

However, these initial digital prototypes were only ever going to be a starting point. We know that some of the challenges of doing meaningful public engagement are its fleeting nature (people’s feelings and opinions change, and how they make decisions depends on context), a lack of opportunity for scalable discussions that uncover broader values, and not enough visibility of outcomes (a feedback loop). We felt that digital affords more than has been utilised so far by the public engagement community, and so set out to explore what a dynamic feedback loop could look like between the public, government and industry.

What if? — a story to accompany a speculative prototype

**This is an imagined scenario to explore what a ‘public engagement system’ could look like.**

The Home Office wants to utilise automated decision making within its immigration service to determine whether or not an applicant has cheated on an important test, with the intent that cheaters should be deported.

In order to consult the public’s opinion on this matter, the Home Office’s Digital Innovation team (HODI) decides to utilise a public engagement tool to put out an inquiry on this matter.

Public inquiry — round one

The first round of inquiry aims to discover whether the automated analysis of voice samples, to decide if there was cheating in tests, is acceptable in the context of immigration/deportation decisions. Participants can respond via a simple Yes or No response. They can also raise questions for future rounds of inquiries.

After deploying the first round of inquiry, the team can view and review the participant feedback on the technology and its usage in context via a dashboard, such as shown below. In response to participant feedback, the HODI team review their plans and make any modifications if necessary.

Public inquiry — round two

The HODI team then deploys the second round of inquiry to their participants. Based on questions raised by participants in the first round, and with some expert input, the team wants to discover the public’s view on three specific aspects of automated decision making:

- Data — what information is used to make the automated decision and where it comes from

- Algorithm — the logic that determines how the automated decisions are made, who designed it, and what transparency there is about how it operates

- Output — how the decision is implemented, and the implications of this automated decision and governance

In this second round, participants can respond via a slider response (i.e. strongly disagree to strongly agree), and again raise questions for future inquiries.

You can explore the prototype for the second round of inquiry here.

The team again collects and analyses the feedback displayed on their dashboard. They are able to see the variety of opinions across the three areas, and in response, they prototype a trial run of the automated decision making system that takes into account any areas of concern or potential risks and opportunities raised by participants. The team carries out a trial run and collects the results.
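As a rough illustration of what such a dashboard might compute, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the 1 to 5 slider scale, the area labels `data`, `algorithm` and `output`, and the function name `summarise_responses` are hypothetical and are not taken from the prototype.

```python
from collections import defaultdict
from statistics import mean


def summarise_responses(responses):
    """Group slider responses by area and summarise each group.

    `responses` is an iterable of (area, score) pairs. The score is a
    hypothetical slider value: 1 = strongly disagree, 5 = strongly agree.
    """
    by_area = defaultdict(list)
    for area, score in responses:
        if not 1 <= score <= 5:
            raise ValueError(f"score out of range: {score}")
        by_area[area].append(score)

    return {
        area: {
            "responses": len(scores),
            "mean_score": mean(scores),
            # Share of participants who chose 1 or 2 on the slider.
            "share_disagreeing": sum(s <= 2 for s in scores) / len(scores),
        }
        for area, scores in by_area.items()
    }


# Invented example data, not real survey results.
example = [("data", 1), ("data", 2), ("data", 5),
           ("algorithm", 4), ("algorithm", 4), ("output", 3)]
summary = summarise_responses(example)
```

Reporting the share who disagree alongside the average matters here: an average near the middle of the scale can hide a sizeable group with strong concerns about one area.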

Public inquiry — round three

The team undertakes a third round of inquiry based on previous responses and the results of the trial run. This round aims to discover the public’s response to the practical results of the trial and how that differs from their previous responses. Where possible a control system or additional checking is used as well.

Participants can view the method used in the trial and its results. Was it accurate? What were the errors? Did the automated decision work as intended? Were there any detected and uncorrected biases? What was the number of false positives and false negatives?
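To make those questions concrete, here is a minimal sketch in Python of how trial results could be turned into the figures participants would see. It assumes, purely for illustration, that each applicant in the trial has both the system's flag and an independently checked outcome. The names `TrialSummary` and `summarise_trial` are hypothetical and the example data is invented.

```python
from dataclasses import dataclass


@dataclass
class TrialSummary:
    true_positives: int
    false_positives: int
    true_negatives: int
    false_negatives: int
    accuracy: float
    false_positive_rate: float
    false_negative_rate: float


def summarise_trial(flagged, actually_cheated):
    """Compare the system's flags with independently checked outcomes.

    Both arguments are lists of booleans, one entry per applicant.
    """
    if len(flagged) != len(actually_cheated):
        raise ValueError("both lists must describe the same applicants")

    pairs = list(zip(flagged, actually_cheated))
    tp = sum(1 for f, a in pairs if f and a)          # correctly flagged
    fp = sum(1 for f, a in pairs if f and not a)      # wrongly flagged
    tn = sum(1 for f, a in pairs if not f and not a)  # correctly cleared
    fn = sum(1 for f, a in pairs if not f and a)      # missed
    total = len(pairs)

    return TrialSummary(
        tp, fp, tn, fn,
        accuracy=(tp + tn) / total if total else 0.0,
        false_positive_rate=fp / (fp + tn) if (fp + tn) else 0.0,
        false_negative_rate=fn / (fn + tp) if (fn + tp) else 0.0,
    )


# Invented example: eight applicants, not real trial data.
summary = summarise_trial(
    flagged=[True, True, False, False, True, False, False, False],
    actually_cheated=[True, False, False, False, True, True, False, False],
)
```

In a deportation context a false positive means an applicant wrongly accused of cheating, so the two error types are worth showing separately; a single accuracy figure would not tell participants which kind of mistake the system makes.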

They can then respond again on whether they feel automated decision making is acceptable in this case, share opinions on the three areas, and raise further questions/perspectives.

The HODI team once again collects this feedback and, with the results of the public consultation period and the trial, decides whether or not to implement automated decision making within immigration testing.

If they decide to go ahead, they will determine what governance and systems might be needed, and also inform the public about how their participation has influenced the new practice or policy change.

How could this be useful?

“The field needs more work to find evidence about and clearly explain how important systems work in practice. This work can help build the case for new policies and technical requirements.”

Building buy-in amongst policymakers and politicians. Even the most elegant public engagement can struggle to get pick up from actual decision makers. Could a system like this become front and centre of people’s minds (and therefore decisions), helping to inform a set of principles to guide policymakers’ and technologists’ decisions as they develop policy or technology?

A more meaningful, ongoing and cumulative view of what the public wants. Building up a picture of what the public wants, over time, that could directly feed into policy and industry decisions, or be taken on by the social sector and civil society as a collective sector response. Could this be a way for civil society to have greater legitimacy in holding tech accountable?

“Policymakers and the public must think more concretely about what “fairness” and “accountability” ought to mean in particular social contexts… We need debates about values more than technology.”

What else do we want to know?

Throughout this process of researching and prototyping, we came across a number of really interesting issues that, for lots of reasons, weren’t appropriate to test at this stage of our work. But in coming phases, we’d also like to look at:

The motivations behind public engagement. Is there some sort of tool or best practice that could help practitioners gauge their own motives for carrying out public engagement — to realise the difference between want, need, and should?

Semantics. How does public perception change according to the terms used? “Artificial intelligence”, for example, implies certain things (i.e., that a human-like “intelligence” fundamentally exists). Do responses change if you use alternative terms like “machine learning”, “algorithms” or “really fancy Excel spreadsheets”?

Mitigating unintended or cumulative effects. What can we do to address the imperfections of the various public engagement methods that exist? Like the fact that citizen juries favour argument over cooperation, that representative sampling makes people bear witness for entire minority groups (“OK, you’re our one Bengali, what do Bengalis want?”) and that online forums lack the nuance and nonverbal insight of in-person events?

The missing perspectives. Who isn’t included in these methods, and how do we know which perspectives we are missing?

Acknowledgements and thank you

Since we started this work in January, it’s been encouraging to see Nesta now launch their new public engagement programme offering grants to projects that demonstrate creative ways of engaging members of the public in issues relating to innovation policy, which builds upon their blog post that considers how to involve the public in the development of AI.

We’re also looking forward to the work that will come out of the partnership between the RSA, DeepMind and Involve, which will use Citizens’ Juries to encourage and facilitate meaningful public engagement on the real-world impacts of AI. Our Director of Policy, Catherine Miller, is on the advisory board.

And we will be keenly following the work of Imran Khan’s Public Engagement team at the Wellcome Trust who are asking really important questions about how public engagement needs to evolve.

With thanks to Richard Wilson, Director at OSCA and founder of Involve, Dr Jack Stilgoe, Senior Lecturer at UCL, Giles Lane at Proboscis and Sarah Castell, Head of Public Dialogue and Qualitative Methods at Ipsos MORI for their insights and expertise on public engagement.

*The experts we consulted with in the initial stages of the work included: FutureGov’s Emma McGowan, the University of Manchester’s Malcolm Oswald, CrowdfundUK’s Anne Strachan FRSA, M&S’s Mike Grey, Tactical Tech’s Alistair Alexander, UnBias’s Ansgar Koene, Horizon Digital Economy Research Institute’s Dr Helen Creswick, and artist Giles Lane.

And a reading list:

Early lessons and methods for public scrutiny: http://omidyar.com/sites/default/files/file_archive/Public%20Scrutiny%20of%20Automated%20Decisions.pdf

Civic thinking for civic dialogue: https://gileslane.net/2018/03/14/civic-thinking-for-civic-dialogue/

Is citizen participation actually good for democracy?: http://blogs.lse.ac.uk/politicsandpolicy/is-citizen-participation-actually-good-for-democracy/

The public is helping make policy. How much power should they have?: https://apolitical.co/solution_article/the-public-is-helping-make-policy-how-much-power-should-they-have/

Data for public benefit: https://www.carnegieuktrust.org.uk/publications/data-for-public-benefit/