Reining in lawless facial recognition: Doteveryone’s perspective

Facial recognition technologies promise a wide range of applications, from identity verification to the detection of criminals. But a growing track record of worrying misuse is leading many to question whether the technology should be banned outright.

In the UK, police use of the technology is being challenged in the courts amid calls for a ban, while cases of inaccurate, biased and dubious applications continue to mount.

This post outlines the considerable challenges posed by facial recognition technology and gives Doteveryone’s perspective on how to make the technology accountable and responsible.

Read the full briefing paper on facial recognition.

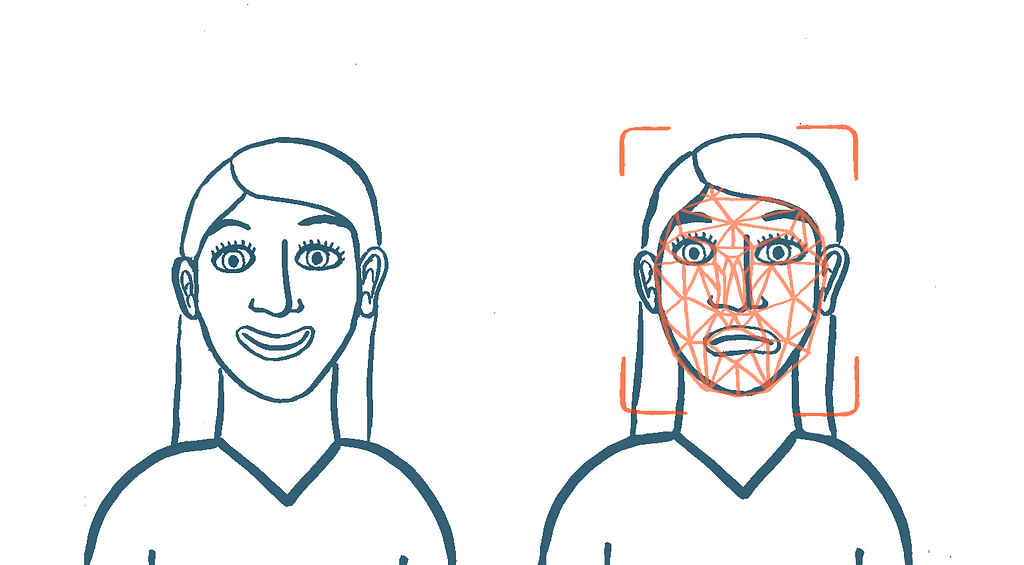

What is facial recognition?

Facial recognition technologies (FRT) identify and categorise people by analysing digital images of their faces. The most advanced forms typically use artificial intelligence to map an individual’s facial features — then compare this map to a database to look for a match, or to categorise the individual on the basis of inferred characteristics such as age, gender, ethnicity or emotion.

Whilst public debate in the UK tends to focus on the police’s use of the technology to detect suspected criminals and people of interest, the potential uses are much broader. Police in New Delhi, for example, have used FRT to track down 3,000 missing children in four days. The technology can also help visually impaired people read the emotions of those they meet, and it has even been used by farmers to track runaway pigs.

But these positive examples are offset by a worrying track record of inaccuracy and bias, hyper-surveillance of the public and rights violations.

Research by MIT on Amazon’s industry-leading Rekognition software uncovered much higher error rates in classifying the gender of darker-skinned women than of lighter-skinned men. The Chinese government has used the technology specifically to profile, track and persecute Uighur Muslims. Meanwhile, a host of tech giants are engaged in a race to the bottom to hawk their FRT surveillance systems to the UAE, where the technology’s potential to enable human rights abuses has been described as “absolutely terrifying”.

Oversight of facial recognition: a broken picture

In the UK, oversight of FRT is spread across a disjointed patchwork of regulators and legislation. These include the Information Commissioner’s Office (ICO) and the Surveillance Camera Commissioner (SCC), as well as the Biometrics Commissioner and the Investigatory Powers Commissioner’s Office (IPCO) where police use of FRT is concerned.

In June 2018 the Home Office published its long-awaited Biometrics Strategy, including a commitment to establish a new advisory board to consider law enforcement’s use of FRT.

But in a recent parliamentary debate, MP Darren Jones summed up the widely negative response to the strategy, saying “the Biometrics Commissioner, the Surveillance Camera Commissioner and the Information Commissioner’s Office have all said exactly the same thing — this biometrics strategy is not fit for purpose and needs to be done again”.

There are a number of key unanswered questions facing FRT, outlined below, alongside Doteveryone’s recommendations for dealing with them.

Where do the public feel FRT is unacceptable?

Whilst all technologies involve ethical trade-offs, the stakes with FRT could not be higher: its benefits must be weighed against a complex set of risks to privacy and security, and from corporate and state surveillance. But these decisions are currently being made by government and corporate organisations alone, out of public view and beyond the democratic process.

Facial recognition technology is not an inevitability, and it must be the public that decides if, where and how it is used.

Recommendations:

We’re calling on the Biometrics Advisory Council to lead an immediate review of FRT laws, and on the ICO to lead an extensive series of public debates to understand where the British public believes the use of FRT is simply unacceptable. When individuals and communities are exposed to these technologies, they must be given a genuine choice to opt out of FRT systems and facial image databases, and be able to seek redress where their rights have been violated.

Until these protections are in place, ALL use of live automated facial recognition technologies should be banned.

How can the UK’s public sector be held accountable for its use of FRT?

Civil liberties groups Big Brother Watch and Liberty are standing up for the rights of the public by taking the UK government and police to court over their use of FRT. But with the legal system seemingly acting as the only, rather than the final, avenue for challenging public sector misuse of FRT, the rest of the UK’s regulatory system must catch up.

Recommendations:

We believe that the ICO, SCC and Biometrics Commissioner should establish a joint Code of Practice for FRT based on the public consultation and review described above.

And to ensure local authorities don’t buy inaccurate FRT or hand over too much power to the tech companies commissioned to develop these systems, we need mandatory procurement policies for the public sector.

How can we encourage international action on FRT?


The British public has encountered suspect FRT services on a number of occasions, including the Russian FindFace app, which lets users identify people from an image of their face without their consent. A Chinese programmer recently developed an openly available tool to help paranoid partners check whether their girlfriends have ever acted in porn, using FRT to cross-reference adult video footage with social media profile pictures. The tool, predictably, does not work on men.

As these examples show, no country or community is totally immune to the risks of FRT developed beyond their borders. We must encourage responsibility on a global scale, but as San Francisco’s recent facial recognition ban shows, we don’t have to wait for national governments to act.

Recommendations:

We are calling on cities and local authorities to establish a Responsible Facial Recognition Coalition, to share good practice around oversight of FRT and publicly commit to using the technology responsibly, if at all.

Respected international bodies should also play their part: the UN should issue a General Comment clarifying where FRT breaches international human rights law.

Read the full briefing on our website.

We will be championing these recommendations in the coming months. Get in touch by email if you can help or have any questions or feedback.

Reining in lawless facial recognition: Doteveryone’s perspective was originally published in Doteveryone on Medium.