To make tech accountable for online harms we need to harness each part of the system

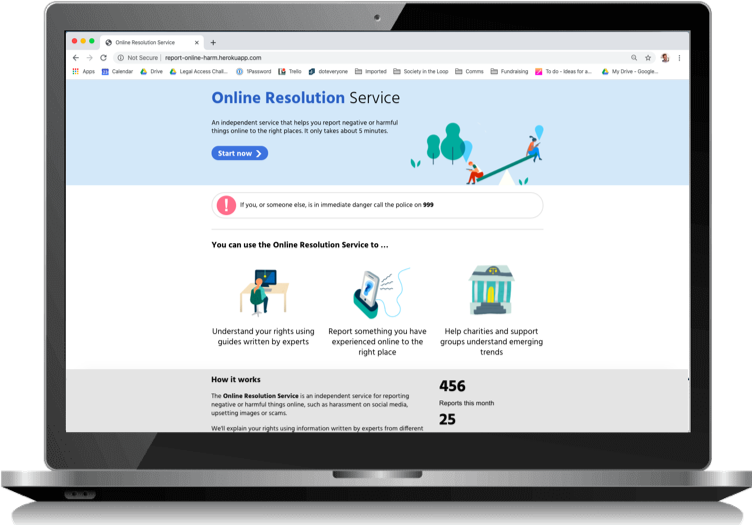

For our Better Redress project we’ve looked at what the public needs when things go wrong online and prototyped the Online Resolution Service. It’s a clear and easily accessible place where individuals can seek redress for online harms they may have experienced – from phishing to online abuse to cyberbullying – in the form of complaints, compensation, and remedies. It aims to help right the power imbalance between tech companies and individuals by empowering people to hold them to account when their digital rights have been breached.

The Online Resolution Service

But to build a robust online system for redress, it is important to include the full range of stakeholders in the online world. There are charities and support groups that help the public with harms they experience online; businesses that are on the receiving end of the public’s complaints, from ‘Big Tech’ companies with thousands of employees to SMEs and startups; and government and regulators, which are developing new legislation for a digital age.

The incoming Online Harms legislation will include provisions to improve the way tech companies handle complaints. If the legislation is to be implemented effectively, it will need to take account of the role each part of the system can play.

We’ve been focusing on small businesses and charities, along with mapping the civil society landscape online. We have interviewed 16 SMEs, SME membership bodies and civil society groups and here’s some of what we found:

Building an online redress service to get the most out of support provided by civil society organisations

Civil society groups already support people to deal with the impacts of technology in a variety of ways. These range from helplines and online resources for individuals to campaigns and advocacy that drive wider change. But there are distinct silos covering specific types of harm – for example child abuse or racial or religious hate speech – with little collaboration between them, meaning members of the public may fall between the cracks when they need help and support. The complexity and pace of the online world mean organisations need to help more people than ever before. Organisations recognise that these silos can pose a problem and that together they could cover more ground, but there is so far no mechanism to bring them all together.

We realised the Online Resolution Service could allow civil society organisations to come together and share the most up to date guidance and support for a variety of harms online in a single place.

We designed the civil society facing side of the Online Resolution Service in a wiki style so it’s easy to contribute guidance and support that can be constantly iterated and added to in order to match the pace of the online world.

Charities told us that they needed better evidence about the impact that tech-driven harms can have on people, and how prevalent those harms might be, so that they can provide better support and advocacy. In response, we’ve adapted the prototype to generate topline statistics about the different categories of problems that the public encounter, which will be fed through to the relevant organisations.
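To make the idea of topline statistics concrete, here is a minimal sketch of how reports might be tallied by category. It is an illustration only, not the prototype's actual code: the `topline_stats` function, the `category` field and the sample records are all assumptions made for this example.

```python
from collections import Counter


def topline_stats(reports):
    """Count reports per harm category and each category's share of the total.

    `reports` is assumed to be a list of dicts with a "category" key;
    this record shape is hypothetical, not taken from the prototype.
    """
    counts = Counter(report["category"] for report in reports)
    total = sum(counts.values())
    return {
        category: {"count": n, "share": round(n / total, 2)}
        for category, n in counts.items()
    }


# Made-up sample data, for illustration only.
sample_reports = [
    {"category": "phishing"},
    {"category": "phishing"},
    {"category": "online abuse"},
    {"category": "cyberbullying"},
]
stats = topline_stats(sample_reports)
```

A summary like this contains no personal details, only counts, which is the kind of aggregate that could be passed on to the organisations working on each category of harm.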

Civil society is already supporting the public in many different ways, but by bringing them together we think we can maximise their impact.

Building an online redress service to work for SMEs

When we spoke to small businesses and the membership bodies that represent them, it became clear that there was not only a strong desire to be responsible, and to comply with regulation, but also to build and maintain trust with their customers.

But they need some help to do so.

They were concerned that the incoming online harms legislation, though targeted at Big Tech, would have an unfair and disproportionate effect on smaller companies. The major tech companies have the time and financial resources to manage incoming regulation, whereas SMEs generally don’t have the headspace to consider the impact of legislation that might not come into force for some time.

There are many similarities between how companies perceive the planned online harms regulation and how they perceived the introduction of GDPR. Back in 2018, many businesses were not aware of what GDPR meant for them, or whether they were even in scope. Those who did understand the regulation found it difficult to know how to apply it practically to their business. In practice this meant that there was widespread confusion and panic on the 25th of May 2018 when GDPR came into force. There are lessons from GDPR that could mitigate similar problems when implementing online harms legislation.

Support for SMEs must be practical and simple to use, and it must allow them to focus on what is important – their product. We’ve incorporated SMEs’ concerns into the Online Resolution Service. It will provide them with better statistics to understand their users, and it will organise and filter the complaints they receive so they are not inundated with malicious or frivolous ones. By making sure that people’s problems are directed to the right place, businesses will only see the complaints they need to react to. This will empower more companies to act responsibly, and to improve their products to reflect the needs of their users.
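The filtering and routing described above could look something like the sketch below. This is a hedged illustration rather than how the Online Resolution Service is built: the `Complaint` record, the `flagged_frivolous` field and the `route_complaints` function are hypothetical, and the sketch assumes some earlier review step has already decided which complaints are malicious or frivolous.

```python
from dataclasses import dataclass


@dataclass
class Complaint:
    business: str   # the company the complaint concerns
    category: str   # e.g. "phishing", "online abuse"
    text: str
    # Assumption: set by an earlier review step that is not shown here.
    flagged_frivolous: bool = False


def route_complaints(complaints):
    """Group actionable complaints by business, skipping flagged ones."""
    inbox = {}
    for complaint in complaints:
        if complaint.flagged_frivolous:
            continue  # businesses never see these
        inbox.setdefault(complaint.business, []).append(complaint)
    return inbox


# Made-up sample data, for illustration only.
complaints = [
    Complaint("ShopCo", "phishing", "Fake checkout page"),
    Complaint("ShopCo", "other", "Nuisance message", flagged_frivolous=True),
    Complaint("ChatCo", "online abuse", "Abusive messages"),
]
inbox = route_complaints(complaints)
```

Each business ends up with a list containing only the complaints it needs to act on, which is the outcome the service aims for, however the flagging itself is done.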

Changing the culture around seeking redress

Doteveryone’s forthcoming People, Power and Technology 2020 research reveals that 50% of the UK population believe that “it’s just part and parcel of being online that people will try to cheat or harm me in some way” and 67% said that “people like me don’t have any say in what technology companies do.”

Faced with this high level of cynicism and disempowerment we continue to believe, as we wrote in Doteveryone’s Regulating for Responsible Technology report in 2018, that: “if technology is going to earn trust, it’s vital that the public are able to hold to account the technologies that shape their lives. If not, the current cynicism may grow to an ingrained opposition to all innovation and missed opportunities for society and the economy.”

Redress is an important lever for the public to hold technology to account, but it must be a system that produces good outcomes and does not lead the public down dead ends. So along with making the redress process simpler for SMEs, bringing together civil society organisations and ensuring the government builds online harms legislation that accounts for their needs, we also need to see a change in the culture around seeking redress online. We need to create a movement which normalises complaining about harmful experiences online. And this has to be underpinned by a system that takes into account the needs of each part of the online world.

By building a system which works best for each stakeholder involved, we create something which better serves the public and ensures we’re participating in an online world which is working for everyone.